Big Minds: How some of the most successful investors are investing in AI

Read and listen to what Brad Gerstner (Altimeter), Andrew Homan (Maverick Silicon), Josh Wolfe (Lux Capital), and Chase Coleman (Tiger Global) have to say

I think we’re in … inning one or two of a decade-plus long buildout that will transform compute, will transform all of our lives, transform our businesses. —Brad Gerstner

We think that semiconductor layer is going to capture a very, very significant amount of the economic rent over this next 10 years. —Andrew Homan

I’m convinced that 50% of your inference… will be on device. —Josh Wolfe

On October 15, Robin Hood, New York City’s largest local poverty-fighting philanthropy, hosted its 13th annual J.P. Morgan / Robin Hood Investors Conference. I had the good fortune of working with a couple of the founders of Robin Hood at the hedge fund I was at, one for quite a long time. It’s hard to overstate the positive impact the organization has had on those in need. And this hasn’t been limited to New York City. Their style of philanthropy, the principles they espouse, and the best practices they’ve developed have helped shape other organizations and efforts globally. They are the best of us.

Of note this year was the AI investor panel exploring the convergence of artificial intelligence and investment opportunity, featuring Brad Gerstner (Altimeter), Andrew Homan (Maverick Silicon), and Josh Wolfe (Lux Capital), and moderated by Chase Coleman (Tiger Global).

In a roughly thirty-minute session, these esteemed investors shared their insights on how they are approaching AI as an asset class. The conversation covered the capital cycle driving AI’s buildout, the layers of value creation across chips, models, and applications, and the emerging winners as compute, memory, and data converge into the next great investment opportunities.

To date, AInvestor has focused largely on the process of investing. With this note, we begin to explore where top investors look to capture the value created from building, running, supporting, and using AI. This is what I refer to as “AI as an asset class.”

The rest of the note is structured as follows:

Learnings

Transcript

Frequently Asked Questions (FAQs)

Mind Map

Read or skim at your pleasure. Of course, I hope you’ll take in everything below, but I also encourage you to click through and watch the session in full. You’re in for a treat.

Learnings

This has been a fun note to work on. Though I don’t personally know any of the folks on the panel, I’ve come to trust my ability to recognize those who know their craft. There is only so much that can be fit into thirty minutes of banter and Q&A, but there was enough here to get my juices flowing.

The core insights that jump out are:

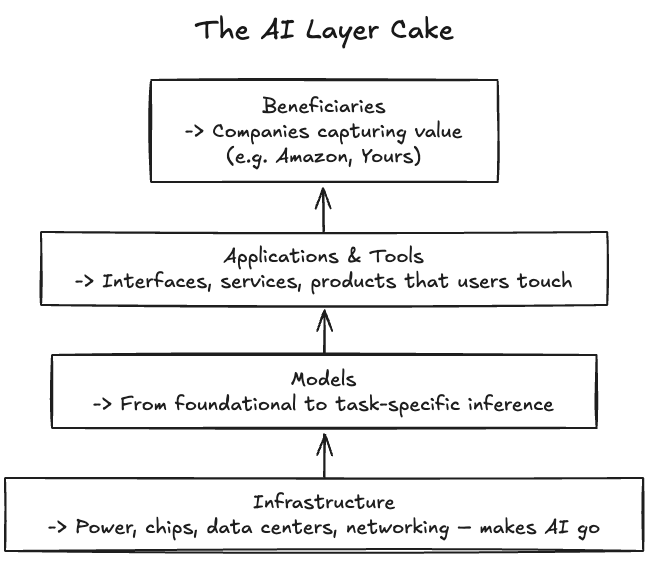

AI Layer Cake

I’ve had the good fortune to work with some iconic investors, and with others who I’d say are equally skilled. While assets and approaches have differed wildly, each has had a framing that brought structure and definition to the otherwise infinite set of options facing investors.

In introducing the AI Layer Cake, Andrew Homan acknowledges different investors have their own definitions and recipes. He thinks about it here as: chips → models → applications and tools.

Adding a couple of layers, my cake looks like:

Infrastructure: This includes everything that goes into making AI come alive, from power to chips to everything else that makes data centers go hum.

Models: I consider these to be anything that takes inputs and processes them into outputs. They range from the biggest foundational models targeting general intelligence to the smallest task-specific ones.

Applications and Tools: These are the products and services that manifest technology and data into outputs and outcomes. In the best of circumstances, this is where value is created.

Beneficiaries: These are companies that capture the value AI helps create. In the early days, much of this appears to be coming in the form of cost savings and efficiency; increasingly, we should see AI play more of a role in expanding revenue opportunities. Scott Galloway, of Prof G fame, presents Amazon as Exhibit 1A as the company further automates its fulfillment and distribution efforts. You can read Scott’s arguments here.

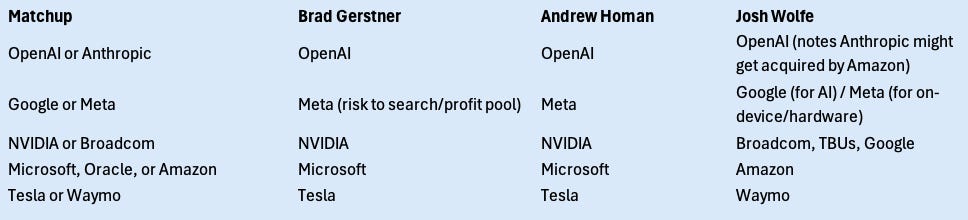

Benefits of Scale Overcome Innovator’s Dilemma

Common wisdom holds that incumbents inevitably fail to adapt for two reasons. First, innovating would cannibalize the existing profit pools they have worked so diligently to build and protect. Second, success leads to size, which leads to bureaucracy, which in turn suffocates the ability to react.

As Brad Gerstner put it:

“We learned in business school that elephants can’t dance—that technology companies get big and get disintermediated. What’s happened over the last 15 years is the exact opposite. Scale has led to bigger advantages.”

The modern AI stack, however, rewards scale rather than punishes it. And beyond access to data, compute, talent, and distribution, an often-overlooked superpower is the ability of Google, Microsoft, Amazon, and Meta to be their own first-and-best customers. The immediacy and intensity of the internal feedback accelerates development while overpowering the traditional sources of friction that usually come with size.

User Interfaces Get Personal

The pathways and mechanisms by which we engage with AI are going to optimize for removing friction that impedes getting the necessary data and insights into and out of these systems.

In addition to the fancy Ray-Bans, Josh Wolfe highlights Meta’s push into neural interfaces, what he calls “life-cording, where every part of your life is going to be recorded 24/7.”

I’ll admit my mind went straight to a Black Mirror episode (c. 2011, if you can believe it), which had a dystopian gloss to it, but I think he is on point. Increasingly, the limiting factor to AI being useful is not just more data, but the right data, where “right” means that which is most relevant to you. In other words, your data.

Compute Gets Personal

This follows naturally from the ability to collect massive quantities of personal (and sensitive) data. Perhaps not always true, but generally, it is more expensive and less secure to cycle data from client to server and back again.

Inference will increasingly be driven to the supercomputers commonly known as our laptops and cellphones. Josh Wolfe puts the number at roughly half of our AI use being on-device.

Memory Is Personal

I’m taking editorial prerogative in slipping this in here as a bridge to some of the tangible investment ideas offered by the panel. If data is to be collected and processed on-device, it should be stored there, too. And when I say data, I include the questions, context, and output generated. That will be a lot of data.

Memory Gets Rerated

Few things make an investor salivate more than redefining what a company does or how it should be valued. Unlike compute, memory is a fairly commoditized product with commensurately low margins. Historically, companies in this sector have ridden the tides of common economic cycles, interrupted by spasms of boom and bust triggered by major hardware or platform transitions (see: PCs, cell phones, and the cloud).

As outlined, AI fits the bill for kicking off a new boom cycle for memory. The question becomes whether or not this represents a new paradigm for valuing memory companies. Both Homan and Wolfe would suggest so.

Andrew Homan,

“Memory is the one piece of the bill of materials that continues to grow each generation.”

Josh Wolfe,

“[…] I do believe that the memory players have that same dynamic that, 11 years ago, people were like, ‘Oh, GPUs are just tightly coupled to the gaming PS5 console wars, Xbox, etc.’ And these are commodity memory chips, but I think they’re going to be ascendant, and I think it’s going to crack the narrative that we need to invest these tens of billions of dollars into data centers.”

Investment read-through

Bonus advice

It’s too early for quantum.

J.P. Morgan / Robin Hood Investors Conference

Wednesday, October 15, 2025

This conversation has been edited for clarity and length.

Chase Coleman: Thank you. It’s a pleasure to be here with all of you today, supporting Robin Hood and the incredible work they do to combat poverty in New York City. I’m looking forward to a good discussion between three incredibly thoughtful investors on a topic we all care about: AI.

To frame it, AI capital expenditures are contributing roughly 1% to U.S. GDP growth—about half of total GDP growth. ChatGPT is the fastest-growing consumer application of all time, expanding three times faster than Meta, Google, or AWS at similar scale. Anthropic may have added more than half of all new annual recurring revenue in the SaaS industry during the first half of this year.

We’re seeing multi-tens and hundreds of billions in infrastructure investments almost weekly. We’re at the outset of reasoning models, video models like Sora and Google’s Nano Banana and Veo 3, and intelligent agents that should drive sustained token growth. As we like to say in this industry: it’s on.

Brad, let’s start with you. This is Q4 2025, and everyone’s talking about AI infrastructure now. Help us extend the horizon: what applications and buildout trends do you expect over the next several years?

The Scale of AI Investment

Brad Gerstner: It’s great to be here—and for such a good cause. As I said to Andrew Ross Sorkin, who was hyperventilating about bubbles this morning on CNBC, rarely do you see bubbles when everyone is already talking about them and when the hit book of the day is about the 1929 crash. NVIDIA’s trading at 27 times earnings—it’s not frothy.

Jensen Huang told me last week that we’ll see $3–4 trillion in compute buildout over the next four to five years. That’s roughly ten times the Manhattan Project—$4 billion then, about $400 billion GDP-adjusted today. We’re doing $4 trillion, all privately funded. That’s a massive economic tailwind.

Why such scale? It’s not just generative AI. Every business is moving from general-purpose compute to accelerated compute. Without that shift, there’s no TikTok, Reels, or instant Google answers. I asked Jensen about the risk of a glut. He said, “No chance in the next two to three years.” The hyperscalers are driving this. Sam Altman’s flurry of deals? They’re frameworks for the next decade, not obligations.

We’re in inning one or two of a decade-plus buildout that will transform computing, business, and daily life. There’ll be volatility—NVIDIA’s had two 25% drawdowns this year—but the direction is clear.

The AI Layer Cake

Chase Coleman: Andrew, you’ve talked about the “AI layer cake.” Walk us through that.

Andrew Homan: The AI layer cake has three layers. Bottom: semiconductors. Middle: large language models like OpenAI and Anthropic. Top: applications and tools.

We believe the semiconductor layer will capture a very significant share of the economic rent over the next decade. That’s a major shift from the 2010–2021 era when software investing rode two tailwinds—the move to cloud and the rise of SaaS. Delivering solutions now requires far more compute. That’s why we’re most excited about chips.

Five-Year Psychological Bias

Chase Coleman: Josh, you’ve written about the “five-year psychological bias.” Explain what that means and how it applies to AI.

Josh Wolfe: First off, Brad’s the best-dressed guy on stage. Normally he’s in a black T-shirt like me—so his suit is a contra indicator. If he’s in a tux next year, we’ve hit the top.

The five-year bias means everyone wants to be invested today where they should have been five years ago. Our job as venture capitalists is to anticipate where people will want to be in three to five years—betting on people and technology.

I’ll give you a parallel. In 2015, I invested in Zoox, a self-driving company later acquired by Amazon. Early on, engineers were running thousands of simulations on chips not yet released—NVIDIA chips. Back then, NVIDIA was worth $15 billion, Intel $150 billion. I called it the pair trade of the century. Today NVIDIA’s worth $4.5 trillion.

I feel the same way now about memory players. Consensus says data centers will scale forever to benefit NVIDIA and AMD. I think 50% of inference will be on-device. A paper a year ago showed you can run large language models on-device using Flash, NAND, and other memory technologies.

The winners: SK Hynix, Samsung, and Micron. SK Hynix is the most nimble, with 60% high-bandwidth memory market share. Samsung’s slower; Micron faces U.S. export limits. Memory chips are going to be ascendant—challenging the assumption that we must spend tens of billions on new data centers.

CapEx booms drive innovation, but they can also create overbuild risk. Two-dimensional AI—voice, video, text, code—is saturated. The next wave is three-dimensional AI: biology and robotics. Those fields lack dense data repositories, so their data is scarce and valuable.

Lastly, all the big model players are overspending. Each wants 100% of user share but has about 20%, spending 500% on CapEx.

Lightning Round

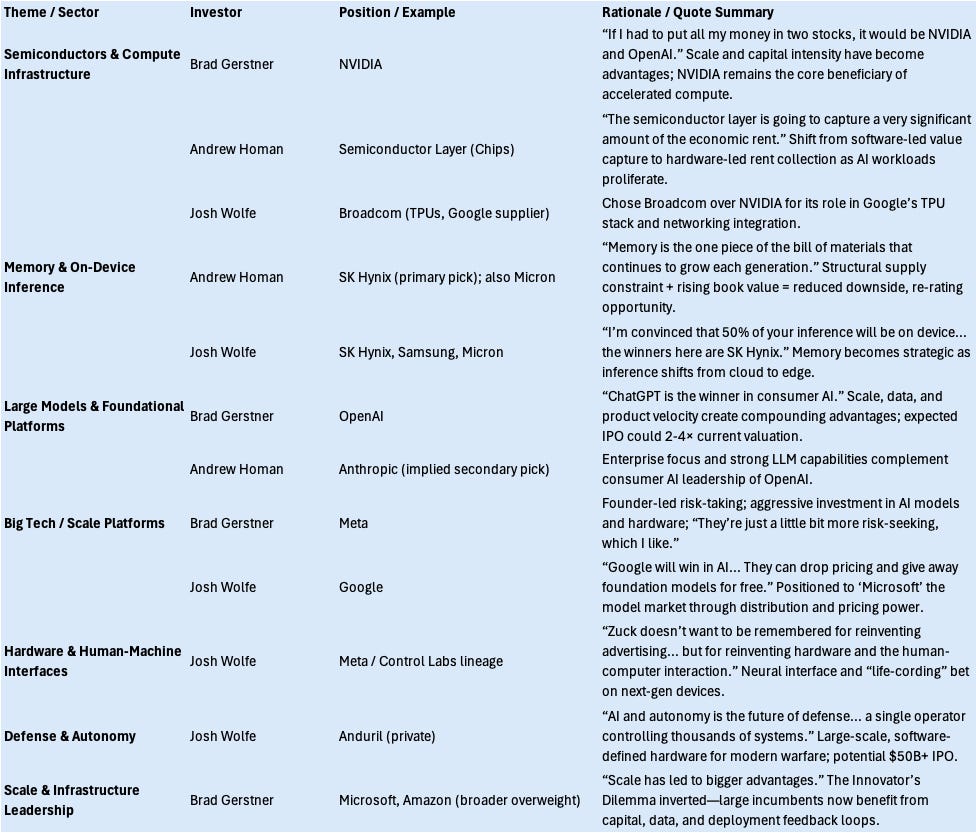

Chase Coleman: Let’s do a lightning round. OpenAI or Anthropic?

Brad Gerstner: OpenAI.

Andrew Homan: Same.

Josh Wolfe: OpenAI. It’s worth $500 billion now and could hit $2 trillion. Anthropic likely gets acquired by Amazon.

Chase Coleman: Google or Meta?

Brad Gerstner: Meta. Google’s profit pool—those ten blue links—is fundamentally at odds with AI. None of our kids use that anymore. I still own Google, but over five to seven years, Meta’s founder-led risk-taking gives it the edge.

Andrew Homan: Agreed—Meta.

Josh Wolfe: Google will win in AI; Meta will win on devices. We sold CTRL-labs to Meta; it made neural wristbands that read muscle signals to replace keyboards and remotes. Zuck wants to reinvent human-computer interaction to route around iOS and Android.

I call it “life-cording”—recording your life 24/7. Younger generations will accept it; older ones will be horrified. But it will unlock enormous AI insights. Meta wins that race.

Chase Coleman: NVIDIA or Broadcom?

Brad Gerstner: NVIDIA.

Andrew Homan: NVIDIA.

Josh Wolfe: Broadcom—for TPUs and Google.

Chase Coleman: Microsoft, Oracle, or Amazon?

Brad Gerstner: Microsoft.

Andrew Homan: Microsoft.

Josh Wolfe: Amazon.

Chase Coleman: Tesla or Waymo?

Brad Gerstner: Tesla.

Andrew Homan: Tesla.

Josh Wolfe: Waymo.

Favorite AI Beneficiaries

Chase Coleman: Name a favorite AI beneficiary—public or private.

Andrew Homan: SK Hynix. Every new NVIDIA or Google chip generation uses more memory. SK Hynix dominates high-bandwidth memory and faces long-term supply limits, keeping pricing strong. Historically, memory cycles were brutal, but this one looks different. Book value’s growing fast—up 50% by 2026—so downside risk is shrinking.

Brad Gerstner: Everyone hunts for the undiscovered idea, but sometimes the best one is the obvious one. In 2006 it was Google; now it’s NVIDIA and OpenAI. The Magnificent Seven are up 10× while the S&P ex-Mag Seven is up 1.5×. Scale has become an advantage, especially in AI where compute and data scale compound power.

Josh Wolfe: I’m long Google—it’ll “Microsoft” the foundation model market by dropping prices and giving access away. I’m also long SK Hynix and Anduril, an AI defense company likely to IPO at $50 billion.

Short? The quantum and modular nuclear reactor baskets. Quantum firms trade at absurd valuations with minimal revenue. Nuclear’s future is large-scale fission, not modular startups.

Andrew Homan: I’m less bearish on quantum. Retail loves those stocks—about $100 billion in aggregate market cap. NVIDIA’s taking quantum more seriously, so it’s worth watching.

Winners and Losers in the AI Shift

Brad Gerstner: I’m with Josh—it’s tough to short meme stocks when rates are falling. But look at how value creation shifted. Google was the toll keeper of the internet, sending users elsewhere and collecting a fee. ChatGPT’s model is the opposite: keep users inside and give them answers directly.

That’s a major problem for intermediaries—Expedia, Booking, anyone collecting a 15–20% toll. Agent commerce, like Walmart’s new assistant, will push consumers toward direct brand relationships. Unless legacy platforms make themselves the default assistant channel, their traffic will fall.

Andrew Homan: Intelligence is moving to the edge. Devices are getting smarter. Qualcomm’s okay but may need acquisitions to keep pace.

Even in advanced tech firms, something as simple as getting a presentation on screen can take ten minutes. That’ll become instantaneous as intelligence moves into every device—phones, PCs, robots, autonomous systems. We’re just in the first inning.

Applications and Agents

Chase Coleman: Brad, what excites you most on the application layer?

Brad Gerstner: Everything’s getting rebuilt. The biggest app in history already exists—ChatGPT. Every internet company that used to do website optimization now asks, “How do I build an agent that interacts with the super-agent?” We’re entering a world of agent networks acting on our behalf.

Capitalism and Inclusion in the AI Age

Chase Coleman: You’ve also worked on expanding access to capitalism through Invest America. Tell us about that.

Brad Gerstner: Capitalism fails if 60% of people don’t have compounding assets. The Invest America Act, which passed this year, creates “Trump accounts”—prosperity accounts for every child at birth, seeded with $1,000 in the S&P 500.

Sixty-five million children qualify now. Starting in 2026, every child born in the U.S. will receive one. Companies and parents will contribute too. Sign-ups begin in December. It’s how we get everyone on Team America, especially as AI disruption accelerates. If people feel ownership in the system, capitalism will endure.

Chase Coleman: We could keep going, but our time is up. Thanks to our panelists and to Robin Hood for bringing us together.

FAQs

The following summarizes the discussions and insights regarding the AI investment landscape, drawing from the J.P. Morgan / Robin Hood Investors Conference transcript featuring Brad Gerstner (Altimeter Capital), Andrew Homan (Maverick Silicon), and Josh Wolfe (Lux Capital), moderated by Chase Coleman (Tiger Global). Note that I have used Google’s NotebookLM to generate this.

Market Growth and Sentiment

Q1: What is the current scale and growth rate of the AI industry?

The growth metrics are immense:

AI CapEx is contributing approximately 1% to US GDP growth, or about half of the total.

ChatGPT is the fastest-growing consumer application of all time, growing three times the rate of Meta, Google, and AWS when they were at a similar scale.

Anthropic added more than half of the net new Annual Recurring Revenue (ARR) of the SaaS industry in the first half of this year.

The industry is currently seeing infrastructure investments in the tens and hundreds of billions of dollars on an almost weekly basis.

Q2: Are we currently experiencing an AI bubble?

Panelists expressed skepticism that the market is currently in a bubble. Brad Gerstner noted that rarely do bubbles occur when everyone is talking about them, or when the hit book of the day is about the stock market crash of ’29. He also pointed out that NVIDIA is trading at 27 times earnings.

Q3: What is the long-term outlook for the necessary compute buildout?

The expected scale of the compute buildout is massive and long-term:

Jensen Huang projects $3 to $4 trillion of compute buildout over the next four to five years.

For context, this buildout is roughly 10 times the Manhattan Project on a GDP-adjusted basis (the project cost $4 billion at the time, which is $40 billion inflation-adjusted or about $400 billion GDP-adjusted). The $4 trillion is being funded privately and is a massive tailwind for the economy.

This buildout is required because all businesses in America are moving from general purpose compute to accelerated compute.

The industry is believed to be in “inning one or two of a decade-plus long buildout.”
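The multiples in this answer can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted above (the upper end of the $3 to $4 trillion projection and the three Manhattan Project baselines); the variable and function names are illustrative, not from the panel.

```python
# Figures as quoted in the panel summary, in US dollars.
buildout_usd = 4_000_000_000_000  # upper end of the projected $3-4 trillion

manhattan_usd = {
    "nominal": 4_000_000_000,             # $4 billion at the time
    "inflation_adjusted": 40_000_000_000,  # $40 billion
    "gdp_adjusted": 400_000_000_000,       # about $400 billion
}


def buildout_multiples(buildout, baselines):
    """Return how many times larger the buildout is than each baseline."""
    return {label: buildout / value for label, value in baselines.items()}


multiples = buildout_multiples(buildout_usd, manhattan_usd)
for label, multiple in multiples.items():
    print(f"{label}: {multiple:,.0f}x")
```

Only the GDP-adjusted baseline yields the roughly 10× figure; against the inflation-adjusted baseline the same buildout is about 100×.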

Q4: Is there a risk of a “glut” in compute capacity soon?

Jensen Huang indicated that there is “no chance in the next two to three years” of a glut. This is because in the near term, only the hyperscalers are paying for this massive investment.

Investment Strategy and Value Capture

Q5: How is the AI industry structured, and which part captures the most value?

Andrew Homan describes the industry using the AI layer cake analogy, which consists of three layers:

Bottom layer: Chips (semiconductors).

Middle layer: Large Language Models (LLMs), such as OpenAI and Anthropic.

Top layer: Applications and Tools.

Homan believes the semiconductor layer is going to capture a very, very significant amount of the economic rent over the next 10 years. This differs from the playbook that worked from the Global Financial Crisis through 2021, which favored software and the SaaS business model.

Q6: What is the “five-year psychological bias”?

The bias is that “everybody wants to be invested today where they should have been five years ago”. The role of venture capitalists is to anticipate where people will want to be invested in the next three, four, or five years.

Q7: Where is AI inference expected to be conducted, and what companies benefit from this view?

The consensus view is that massive data centers and H100 clusters will handle inference, benefiting hyperscalers and chip players like NVIDIA and AMD. However, Josh Wolfe is convinced that 50% of inference will be on device.

Companies expected to benefit from this shift to on-device inference are the memory players:

SK Hynix: Considered the most nimble on the Korean side, with a 60% share of the high-bandwidth memory (HBM) market, per the transcript above. It benefits from HBM attach rates for GPUs, and HBM is critical for AI models.

Samsung: Considered the “Intel of the space,” but potentially more bureaucratic and slow-moving.

Micron: Based in the US, but may face restrictions due to US export and geopolitical issues.

Q8: Which sectors of AI are the next investment waves?

The panel suggests that any investment in 2-dimensional AI (text, voice, video, code) is considered “done” because so much capital has already entered the space. The next wave of investment is in 3-dimensional AI, specifically biology and robotics. These domains are valuable because they lack a dense repository of data to train upon, making data scarce.

Q9: What established scale players still hold the greatest advantage?

Panelists strongly recommend staying invested in scale players, arguing that the idea that “elephants can’t dance” has been disproven over the last 15 years.

NVIDIA and OpenAI are highlighted for having massive scale advantages.

If forced to invest in only two stocks, Brad Gerstner would choose NVIDIA and OpenAI, believing ChatGPT is the winner in consumer AI, which will be the biggest market in the next 5 to 10 years.

Quick Picks and Wrong Side of Change

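Q10: What companies are favored in the lightning round matchups?

OpenAI or Anthropic: OpenAI (Gerstner, Homan, Wolfe).

Google or Meta: Meta (Gerstner, Homan); Wolfe expects Google to win in AI and Meta to win on devices.

NVIDIA or Broadcom: NVIDIA (Gerstner, Homan); Broadcom (Wolfe).

Microsoft, Oracle, or Amazon: Microsoft (Gerstner, Homan); Amazon (Wolfe).

Tesla or Waymo: Tesla (Gerstner, Homan); Waymo (Wolfe).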

Q11: Which assets are considered on the “wrong side of change”?

Josh Wolfe identifies two baskets that he would personally be short:

Quantum companies: Described as “quantum flibbidy-doodled”. These companies often have low revenue ($10M to $50M) but high market caps (around $5B). All promised capabilities (like molecular modeling and unbreakable encryption) can currently be achieved with modern compute and GPUs.

Modular nuclear reactor companies: While nuclear power is seen as the future (large-scale fission, rebranded as elemental energy), these modular companies are expected to be “disasters”.
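To put the quoted quantum figures in perspective, a rough price-to-sales calculation helps. The sketch below assumes the round numbers mentioned above (a market cap of about $5 billion against $10 million to $50 million of revenue) and treats that revenue as annual, which is an assumption. It is illustrative only and does not describe any specific company.

```python
def price_to_sales(market_cap_usd, revenue_usd):
    """Market capitalization divided by revenue (assumed annual here)."""
    if revenue_usd <= 0:
        raise ValueError("revenue must be positive")
    return market_cap_usd / revenue_usd


market_cap = 5_000_000_000   # about $5B, as quoted
revenue_low = 10_000_000     # $10M, low end of the quoted range
revenue_high = 50_000_000    # $50M, high end of the quoted range

# The lowest revenue gives the highest multiple, and vice versa.
ps_high = price_to_sales(market_cap, revenue_low)
ps_low = price_to_sales(market_cap, revenue_high)

print(f"Implied price-to-sales range: {ps_low:.0f}x to {ps_high:.0f}x")
```

On these round numbers the implied multiple is in the hundreds, which is the gap between valuation and revenue that Wolfe is pointing at.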

Q12: What business models are threatened by AI agents?

The business model of existing internet companies is under pressure because AI’s objective is the opposite of Google’s original objective.

Google’s objective was to get the user to ask a question and then charge a fee to send them to sites (like Booking.com or Amazon).

ChatGPT’s objective is to give the user the answer directly.

Intermediaries like Expedia and Booking.com that collect a 15% or 20% toll are threatened when consumers rely on a personal assistant agent (“super agent”) to book things without specifying the site. Winners in the existing app ecosystem are suspect.

AI and Societal Impact

Q13: How is AI displacement and wealth disparity being addressed?

Brad Gerstner noted that in the age of AI, there will undoubtedly be dislocation and displacement. To overcome these challenges and prevent the country from moving toward socialism, the goal is to double down on capitalism by getting everyone into the system.

The Invest America Act was passed into law this year to address this:

Starting in 2026, every child born in America will receive a prosperity account at birth.

This is effectively a 401(k) from birth, initially seeded with $1,000 in the S&P 500.

This aims to ensure that the 60% of people who currently lack compounding assets feel like they are “on Team America”.

Analogy: The current AI investment phase is like a massive gold rush, but instead of focusing on the surface-level gold (the applications), the most confident investors are betting heavily on the companies that sell the specialized mining equipment (the semiconductors and memory) that everyone needs, regardless of which application wins the race.

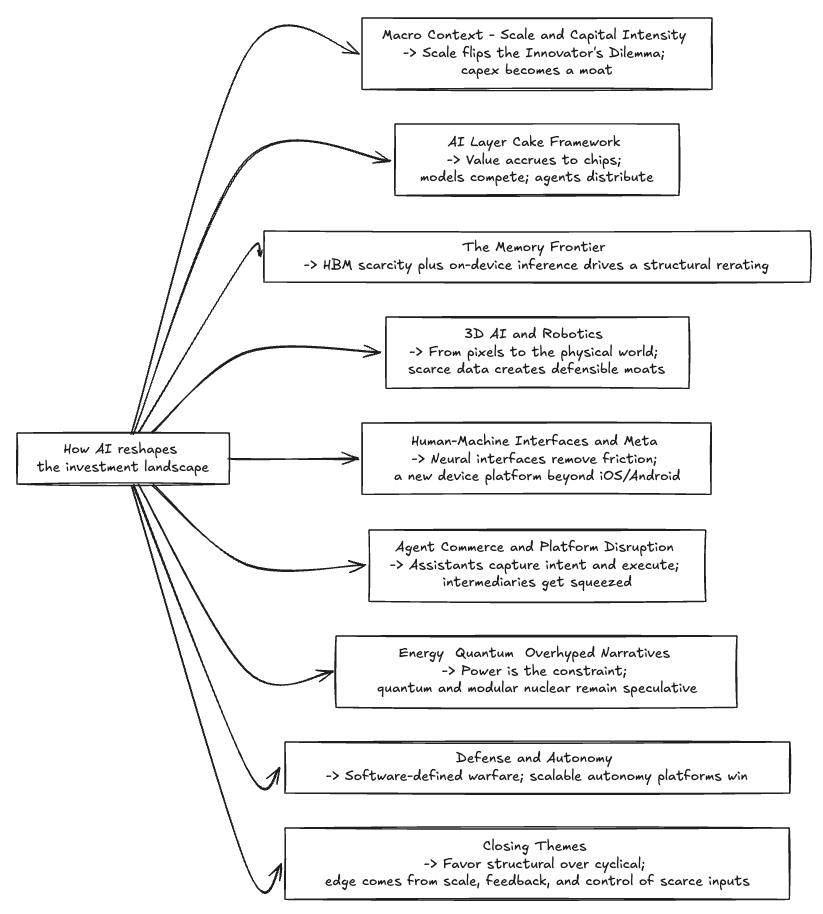

Mind Map

I. AI Investor Panel: Overview and Context

A. Central Theme

Exploration of how artificial intelligence reshapes the investment landscape.

Focus on infrastructure, scale, value capture, and emerging frontiers like memory, robotics, and human–machine interfaces.

II. Macro Context: Scale and Capital Intensity

A. AI CapEx as Economic Engine

AI capital spending adds roughly 1% to U.S. GDP growth.

Private sector driving a $3–4 trillion compute buildout over 4–5 years—about 10× the Manhattan Project.

B. Inversion of the Innovator’s Dilemma

Scale is now an advantage, not a liability.

“Elephants can dance”: large incumbents like NVIDIA, Microsoft, and Meta leverage capital, data, and feedback loops to accelerate innovation.

Immediate feedback from massive user bases turns corporate size into a compounding edge.

III. The AI Layer Cake Framework

A. Layer 1 – Semiconductors (Infrastructure)

Foundation of value creation; hardware dominates economic rent.

Companies: NVIDIA, AMD, Broadcom.

B. Layer 2 – Models (Foundation Models)

LLM developers such as OpenAI, Anthropic, and Google (Gemini) form the middle tier.

Competition split: OpenAI (consumer) vs. Anthropic (enterprise).

C. Layer 3 – Applications and Agents

Transition from static apps to dynamic agent ecosystems.

“Everything will be rebuilt” around interaction with super-agents (Brad Gerstner).

D. Layer 4 – Value Capture and Beneficiaries

Need to map technical bottlenecks (compute, memory, data) to business models.

Structural advantage shifts toward those controlling scarce inputs or distribution.

IV. The Memory Frontier

A. Structural Rerating Argument

Memory’s share of system cost rises each generation.

High-bandwidth memory (HBM) supply constrained; pricing power increasing.

“Your downside is actually going down as we move into the future.” — Andrew Homan

B. On-Device Inference Thesis

“I’m convinced that 50% of your inference will be on device.” — Josh Wolfe

On-device AI reduces dependence on data centers; flash and NAND become strategic.

Key beneficiaries: SK Hynix, Samsung, Micron.

C. Investment Implication

Memory transitions from cyclical to structural profit pool.

Parallel demand curves: cloud training + edge inference.

V. 3D AI and Robotics

A. Next Frontier Beyond Text and Images

“Anything in two-dimensional AI is done… The next wave is three-dimensional AI: biology and robotics.” — Josh Wolfe

Data scarcity in physical domains (movement, manipulation, sensing) makes these markets defensible.

B. Early-Stage Opportunity

Robotics, bioengineering, and synthetic data platforms will define the next investment cycle.

VI. Human–Machine Interfaces and Meta’s Bet

A. From Screens to Sensing

“Typing is so yesterday.” Interaction shifting from keyboards to neural inputs and gestures.

Focus on removing friction in how data enters and exits AI systems.

B. Wolfe on Meta’s Vision

“Zuck doesn’t want to be remembered for reinventing advertising… but for reinventing hardware and the human-computer interaction.”

Meta’s CTRL-labs acquisition → wrist-worn neural bands enabling “life-cording”: continuous, passive data capture.

Goal: bypass iOS and Android; create new device platforms for personalized AI.

VII. Agent Commerce and Platform Disruption

A. The End of Search Toll Collectors

Brad Gerstner: “Google’s objective was to send you away. ChatGPT’s objective is to keep you inside and give you the answer.”

Transition from search-driven traffic to agent-executed transactions.

B. Consequences

Pressure on intermediaries (Expedia, Booking).

Direct brand-to-agent commerce reshapes digital distribution.

VIII. Energy, Quantum, and Overhyped Narratives

A. Energy as Bottleneck

AI data center expansion constrained by energy availability.

B. Wolfe’s Skepticism on Quantum and Modular Nuclear

“I’d be short the basket of all the quantum companies… They make absolutely no sense.”

Prefers large-scale fission over speculative modular startups.

IX. Defense and Autonomy

A. Anduril as Archetype

“AI and autonomy is the future of defense… a single operator controlling thousands of systems.” — Josh Wolfe

Example of AI-native industrial transformation with scalable, software-defined hardware.

X. Closing Themes

A. Common Investment Threads

Structural over cyclical plays.

Scale, feedback, and control of scarce inputs define enduring edge.

B. Shared Long Positions

NVIDIA, OpenAI, SK Hynix, Meta, Microsoft, Google, Anduril.

C. Meta-Insight

AI is no longer just a software story; it is an infrastructure revolution spanning compute, memory, energy, and human interfaces—the new architecture of value creation.

Disclaimer: The information contained in this newsletter is intended for educational purposes only and should not be construed as financial advice. Please consult with a qualified financial advisor before making any investment decisions. Additionally, please note that we at AInvestor may or may not have a position in any of the companies mentioned herein. This is not a recommendation to buy or sell any security. The information contained herein is presented in good faith on a best-efforts basis.
