Building and Maintaining an Edge: AI's Role with Michael Mauboussin

Michael Mauboussin breaks down how winning investors may use AI to eliminate decision noise, systematically exploit behavioral biases, and scale their edge across more opportunities

"Maybe AI is that sort of glue that brings these two communities together—the quants and the discretionary people—or takes the greatest hits of both these communities. And I think that's literally what the center books are doing." - Michael Mauboussin, Head of Consilient Research at Counterpoint Global

Michael Mauboussin’s legendary career spans Wall Street, academia, and the science of complex systems. He was Chief Investment Strategist at Legg Mason Capital Management, partnering with the iconic Bill Miller during his record-breaking streak of beating the S&P 500. He also served as Chief Investment Strategist and Head of Global Financial Strategies at Credit Suisse. Today, Michael leads Consilient Research at Morgan Stanley’s Counterpoint Global and has spent three decades as a beloved adjunct professor at Columbia Business School. Along the way, he’s chaired the Santa Fe Institute, the world’s hub for complexity theory; written investing classics like More Than You Know and The Success Equation; and reshaped how we think about behavioral finance, market expectations, and the role of luck versus skill.

I first met Michael thirty years ago, though most of our conversations have probably been at hockey rinks and coffee shops. Every time, my head spins. He’s that rare thinker who’s both brilliant and relentlessly practical. Which is why I’m thrilled he’s kicking off our investor series on how top minds are using AI to drive returns.

Click here for FAQs and a mind map summarizing the key concepts from our interview with Michael.

In this conversation, you’ll learn:

How to define and articulate a true investment edge using Michael Mauboussin’s BAIT framework

Why AI’s greatest potential may be bridging quantitative and discretionary investing approaches

How AI can simulate market views and audit investment decisions to mitigate human “noise”

Why documenting investment decisions is crucial and how AI provides honest feedback on decision processes

The significant learning challenge AI creates and how to structure analyst training around it

How AI can be instrumental in “sizing” to effectively monetize an investor’s edge

How to leverage AI for base rate analyses and premortems to improve decision quality

Why distinguishing between systematic and judgment-based tasks is crucial for optimization

Some takeaways:

An investor’s edge means holding a belief that differs from what the market is pricing, that you expect to be borne out, and that therefore gives the investment a positive expected value. In practice, that means actively seeking situations where your assessment diverges from current market consensus and you expect that divergence to resolve in your favor. Before investing, explicitly document why you think you have a behavioral, analytical, informational, or technical advantage.

AI can serve as the bridge between quants and discretionary investors, taking the “greatest hits” from both camps. These communities often operate separately, but AI can function as the “glue” by blending systematic rigor with fundamental insights, similar to how multi-strat center books systematically extract alpha from discretionary pods.

Noise and bias can be fought by simulating a “wisdom of crowds” with AI. Handing the same case to different human analysts yields wildly different answers, and assigning dozens of analysts to every stock to average out that noise is prohibitively expensive. AI can create agent personas (Seth Klarman, Warren Buffett) that analyze ideas from multiple perspectives, quickly surfacing counterarguments and reducing randomness in assessments.

The model of an investor is often better than the investor themselves, and AI can audit the “slippage.” Investors frequently deviate from their own well-defined processes; in one example Mauboussin cites, tracking managers’ position sizing put that slippage at roughly 100 to 150 basis points of performance a year. AI can codify your ideal process and audit actual decisions against it, ensuring adherence to predefined methodologies and identifying behavioral deviations.

AI creates a critical learning challenge that requires new training approaches. To effectively use AI tools, one needs pre-existing knowledge to judge output quality. Future training should make analysts responsible for quality control of AI outputs, shifting focus from tedious data gathering to critical thinking about why results are good or bad.

Systematically monetizing an edge through proper sizing represents a major opportunity. Even sophisticated investors often lack systematic approaches to position sizing. AI can recommend optimal portfolio sizing based on expected value, volatility, and correlation, acting as a “co-pilot” that guides learning and improvement over time.
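The sizing takeaway names expected value, volatility, and correlation as inputs but no specific method. As a minimal sketch, assuming a textbook unconstrained mean-variance rule (weights = inverse covariance times expected excess returns, divided by a risk-aversion coefficient) that is swapped in here purely for illustration and is not Mauboussin’s or any firm’s method, this shows how the three inputs interact for two positions. The function name, the risk-aversion parameter, and all numbers are hypothetical.

```python
def mean_variance_weights(mu, vol, rho, risk_aversion):
    """Hypothetical sketch: unconstrained mean-variance weights for two positions.

    mu: expected excess returns (decimals), vol: volatilities, rho: correlation,
    risk_aversion: higher values scale all positions down.
    """
    s1, s2 = vol
    # Covariance matrix [[a, b], [b, d]]
    a = s1 * s1
    b = rho * s1 * s2
    d = s2 * s2
    det = a * d - b * b
    if det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    # Inverse of [[a, b], [b, d]] is (1/det) * [[d, -b], [-b, a]]
    w1 = (d * mu[0] - b * mu[1]) / det
    w2 = (-b * mu[0] + a * mu[1]) / det
    return (w1 / risk_aversion, w2 / risk_aversion)


# Two equally attractive ideas, first uncorrelated, then partly overlapping.
independent = mean_variance_weights(mu=(0.05, 0.05), vol=(0.20, 0.20), rho=0.0, risk_aversion=5.0)
overlapping = mean_variance_weights(mu=(0.05, 0.05), vol=(0.20, 0.20), rho=0.5, risk_aversion=5.0)
print(independent, overlapping)
```

With uncorrelated positions each gets a weight of 0.25; raising the correlation to 0.5 shrinks each to about 0.17, because the two bets partly duplicate each other. A real sizing co-pilot would also need constraints (gross exposure, liquidity, position limits) that this sketch ignores.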

Introduction and Background

Rob Marsh: Welcome, everybody, to AInvestor. I am Rob Marsh, and today we’ll be diving into AI’s role in identifying, building, and sustaining an investor edge. Today, I’m joined by Michael Mauboussin, Head of Consilient Research at Counterpoint Global with Morgan Stanley Investment Management.

Michael, I think our paths first crossed in the mid-'90s when I was doing a tour with the Raptor group up in Boston for Tudor when Jim Pallotta joined. And it’s gone on from there as we’ve both progressed throughout our careers.

To start, I want to begin where your good friend Patrick O’Shaughnessy typically wraps up his interviews, when he asks, “What’s the kindest thing anyone’s done for you?” In that spirit, I’d just like to thank you for joining today. You’re too kind to be doing this. Thank you.

Michael Mauboussin: Well, Rob, it’s my thrill to be with you. I’ve always thoroughly enjoyed our conversations over the last quarter-century—and it’s shocking to say that it’s that long, or maybe even a little longer. I never fail to learn from our conversations. And so it’s a real pleasure to be with you to talk through some of these topics.

Rob Marsh: Well, again, thank you. Now, when I first asked you to be part of this, you were very modest about the AI part of the conversation. And I have three counters to that.

One, you know a lot more than you let on. You also have a pretty deep bench within the family that supports you. Two, we’re not going to get very technical today. That’s not really the audience or what we’re looking to do. And third, even before AI is a consideration, you have to think about what problem you’re trying to solve, how you’re trying to solve it, and what the risks are. From my seat, you’ve spent a career helping the highest-caliber investors walk through that, so you can set your modesty aside for today.

Michael Mauboussin: Well, I will react to that, Rob. You said my kids are deep into AI, and I’ve learned an enormous amount from them. I ask them a lot of questions. And this is an issue that’s constantly on my mind because I think—and you and I share this view—that the world is going to change a lot in the next three, five, 10, 20 years, and it’s really incumbent on us to think through how that might happen.

And the interesting thing always to think about in the world of investing is what is immutable. So what doesn’t change over time, right? Buying things for less than they’re worth and selling them for more or whatever it is. And what is mutable? We have to be flexible about the mutable stuff, including things that allow us to do our process more efficiently or better.

It’s not false modesty. I don’t really know what I’m talking about, but it’s something I’m very attuned to, and I think it’s something we should all have a conversation about. As we go through this, a couple of things will pop up, but it’s such early days for me. I also feel a little bit generational, that I’m maybe a little too entrenched, so I have to think about opening up to make sure that I’m seeing everything for what it is.

Rob Marsh: As you say, generational wisdom goes a long way, especially when it’s wrapped in your humility and flexibility. So, one of the things I want to do as we get started is to provide a kind of rough outline for the conversation today. I think, first, we should start at the beginning, perhaps define the whole concept of an edge from an investor’s perspective. Why is it important? You’ve written a book, The Success Equation, which applies to this directly. Then, talking more explicitly about edge and framing it for the investor. You have your BAIT framework, which you’ve introduced before and written about, and then perhaps your experience with some of the investors you’ve worked with, stories around how these concepts have been put into action effectively. And from there, we could get into where AI can supercharge both the offense-oriented aspects of a process and the defensive aspects—which I would say is probably more applicable and helpful to more people than the right-hand tail.

Michael Mauboussin: Yeah. No, that’s awesome. Let’s jump in.

Defining Investment Edge

Rob Marsh: Okay. So what is an edge?

Michael Mauboussin: Well, I think the place I’d start is just an acknowledgment that if you’re an active, discretionary manager, it’s an extraordinary act of hubris. Because what we know—and these data have been around for literally decades, going back a century—is that active managers really struggle to beat relatively straightforward benchmarks, for example, the S&P 500.

So, an edge would be a situation where you have an investment with a positive expected value. It means you believe something that is different from what is priced into the market and that your belief is going to come to pass, or the market’s going to come to accept your view. And so, it’s extremely difficult. One of the reasons—and you pointed this out with luck and skill—is that even if the markets were perfectly efficient, just pretend for the sake of argument, and you had hundreds of thousands of people participating, you would have some that beat the market and some that did much worse.

On average, they’d be the market, to state the obvious, but you would attribute the outperformance and underperformance to either good or bad luck, by definition, in our little case study.

Rob Marsh: Yeah.

Michael Mauboussin: And when you realize that markets are pretty efficient—let’s just say that, we can qualify it—you realize that luck plays an enormous role in the results we see. And so our challenge, when we think about edge, is to be very disciplined about homing in on what is skill, and that’s going to be a function of a process. And then how do we find that, how do we identify and exercise that systematically.

So edge is everything. Let me just say one other thing about this that I think helps make it easier for people to recognize. Blackjack is very different than markets, but blackjack is a good example. Ed Thorp, who himself was a great investor, wrote a book back in the 1960s called Beat the Dealer about card counting. The idea is that if you play standard blackjack strategy, it’s about a half percent house edge. So, you’ll lose money over time, but you have a few drinks, have some fun, and that’s all good. But if you’re card counting effectively, you can swing those odds in your favor to anywhere between 1 percent up to 3 or 4 percent. In that case, you have a positive edge. If you can bet more and press your bets a little bit, you have a positive expectation. That’s just a really nice, vivid way of saying that most times you don’t have an edge, but from time to time, things come along that allow you to have some sort of positive expected value, and you want to be in a position to take advantage of it when those things show up.
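The blackjack arithmetic can be made concrete. A minimal sketch, assuming each hand’s edge is expressed as expected gain per dollar staked: the 0.5 percent house edge and the 1 percent counting edge come from the passage, while the bet sizes and the even split between unfavorable and favorable hands are hypothetical.

```python
def expected_profit(edges, bets):
    """Expected profit over a sequence of bets.

    Each edge is the expected gain per unit staked on that bet
    (negative means the house has the edge).
    """
    if len(edges) != len(bets):
        raise ValueError("edges and bets must have the same length")
    return sum(e * b for e, b in zip(edges, bets))


# Basic strategy: 100 hands of $10 at a 0.5% house edge.
flat_basic = expected_profit([-0.005] * 100, [10] * 100)

# Hypothetical counter: bets $10 when the count is unfavorable (-0.5%)
# and presses to $50 when it is favorable (+1%), half the hands each.
counting = expected_profit([-0.005] * 50 + [0.01] * 50, [10] * 50 + [50] * 50)
print(flat_basic, counting)
```

Flat betting at the house edge loses about $5 in expectation; pressing bets only when the odds swing in your favor turns the same 100 hands into an expected gain of about $22.50, which is the point of betting more when you have the edge.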

So it’s really—it sounds very fundamental to say you need to think about this every day, but it’s always asking the question, “Why do I think I know something that everybody else doesn’t know? Why do I think this transaction has an expected value when the person on the other side of my trade doesn’t seem to think so?”

Rob Marsh: But as you’re talking through that, I recognize that as an experience. I’m even taken back to sitting on the back porch with my dad in Southern California when I was in my early 20s. I was saying I didn’t want to be an accountant and wanted to get into this investment thing, and he asked me, “Why you?”

Michael Mauboussin: It’s a fair question. Every morning when you wake up and look in the mirror, that should be the question you ask. That’s right.

Rob Marsh: Exactly. So with that background, maybe we could walk into your framework on how you’ve come to really define it and come up with a taxonomy, a schema, a process.

The BAIT Framework

Michael Mauboussin: Yeah. And look, I’ve drawn here from many, many other people, so to be clear, I don’t want to give myself much credit for this. I did organize it in the acronym BAIT. The idea is you have to have good bait to go catch the big fish. So I’ll give myself credit for that, but that’s about it. But Rob, just as we go through this, as you said, I think it’s not only important to have or pursue an edge, but it’s also very important to understand why you think market inefficiency or opportunities are there. Like, why is market efficiency being compromised or why are the opportunities there?

Rob Marsh: Yep.

Michael Mauboussin: And being quite overt about it. Ideally, writing it down and saying, “Here’s why I think I have the best of this particular situation.” So we end up using four different areas. And by the way, in some ways, you could say everything’s behavioral, but we’ve been talking about BAIT.

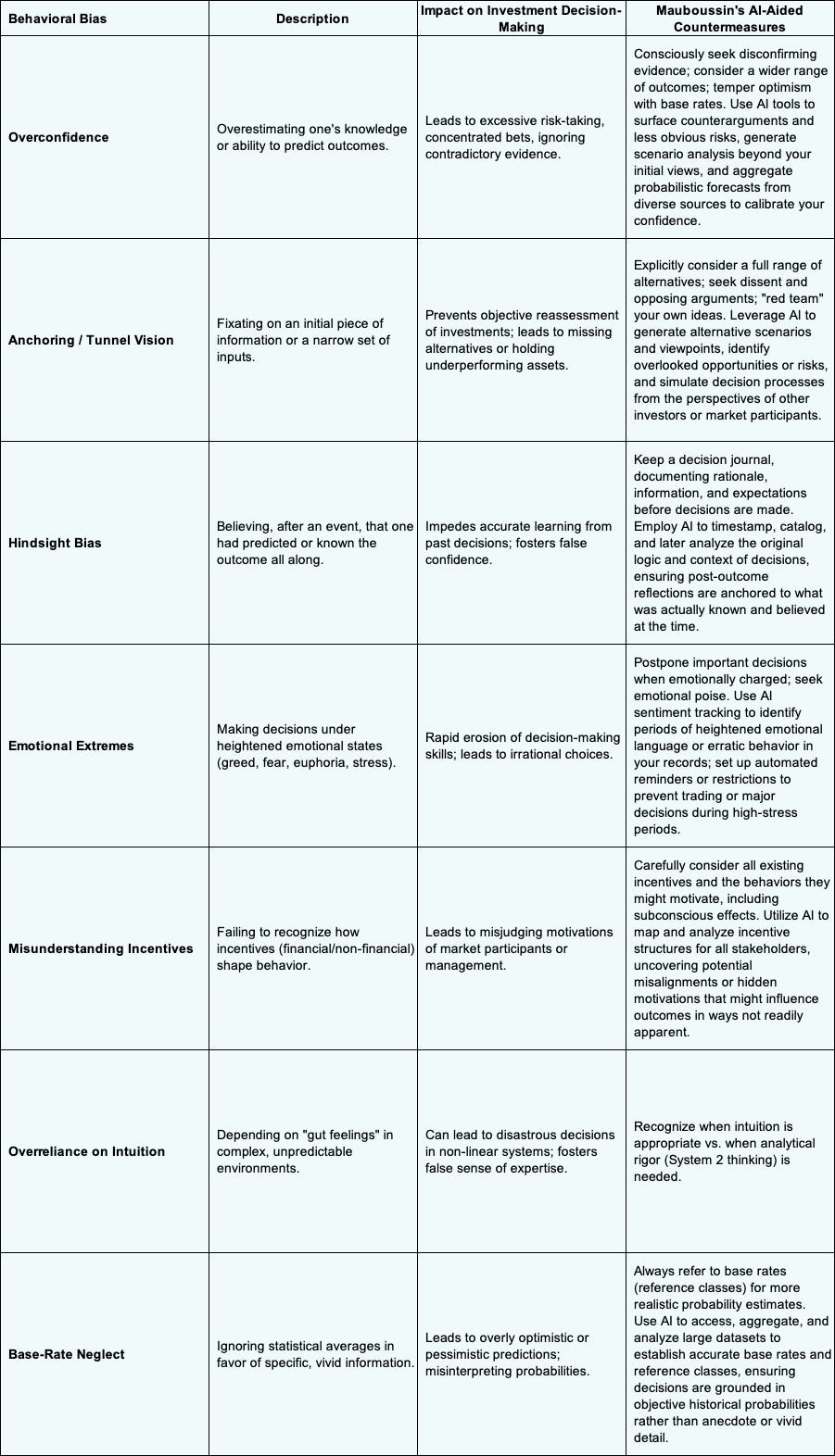

So, the B of BAIT is behavioral. The idea there is that humans are humans. When you read these wonderful histories of markets going back hundreds of years and you read what people were doing, it seems extremely familiar. It’s the exact same sets of emotions, the exact same things people go through today. Of course, we have all sorts of fancy technology at our fingertips, and people didn’t have that hundreds of years ago, but the emotions are absolutely consistent and identifiable. So, we can get into the details of behavioral, but that’s the first one. And as I said, in some ways, everything is behavioral.

The second one is analytical, which is the idea that you and I have the same information but we can analyze it with different degrees of skill. Probably the best, easiest illustration of that is thinking about institutions versus individuals. There’s a really large body of academic research that shows when institutions and individuals compete with one another, it is the institutions that win.

The example there is probably something like tennis. You go out and play with a professional tennis player—same racket, same court, same equipment, and so forth, but they’re just more skillful than you are in playing the game.

The third one is informational, and that’s literally having better information than other folks. That is tricky. Obviously, you would like to have information that other people don’t have, but you need to do all of this legally. People get in trouble skirting the edges of that. So there are two aspects that might be interesting.

One is complexity. There can be situations where information is essentially embodied in other things, and if you can extract it effectively, you can gain some insight. For instance, there’s a lot of interesting work on supply chains. Something happens at company A; what does that mean for company C downstream, for instance? Can you connect those dots in a way that others either are slow to do or can’t do well?

The other one is straight-up attention. It sounds silly, but our lives are dictated by what we pay attention to. For whatever reason, I do think markets feel like they have a kind of collective spotlight on what is important at the moment. And that could be for the economy overall, an industry, or a very specific company. So what you pay attention to is really important. I will just say this is me riffing, this is commentary. I don’t know that this is completely supportable, but I found it fascinating when we had, what was it, Nvidia Monday, in January, when the stock went down 15 percent—it was like a $600 billion market value loss in one day. And it was on the back of this information about DeepSeek, this Chinese model that came out. And what was interesting—you were mentioning my own family—I’d had conversations with my kids literally about the two technical papers that DeepSeek published, and one came out in December and one came out in January, like a week or two before Nvidia Monday. So there was nothing technical that was not in the complete public domain. The only thing that I saw that was different is that DeepSeek had a lot of downloads on the app store over the weekend, but literally in terms of what they were doing, the technical aspects of it, basically all was in the public domain. And it was just like somehow the market one day woke up and said, “Oh my god, this is a big deal.” And by the way, the stock has since recovered and so on and so forth. But that’s a fascinating example of attention.

Rob Marsh: I was befuddled as well because I’m looking at it and I’m kind of like…

Michael Mauboussin: Right, exactly. And the last one is technical, which I find fascinating. This is basically asking, are there people buying or selling for non-fundamental reasons—or for reasons where they don’t want to buy or sell? And as a consequence, I could take the other side of that trade as a liquidity provider because they’re being induced to do something. So that’s the basic setup. And again, as I say, before you make an investment, it is really ideal to write down, “Here’s what I think is going on, here’s why I think I have the best of this particular investment situation,” and to see whether your investment works out or does not, if you’re right for the right reasons or right for the wrong reasons or wrong for the right reasons and so on.

Now the other thing to say, and I’m sure everybody listening fully appreciates this, but everything you do in investing is probabilistic. So when we say positive expected value, we’re not saying we’re going to make money with 100 percent certainty. What we’re saying is if we make many of these types of investments over time, we are going to do well and generate excess returns, understanding that for any particular investment, even with the best work done, best process, we are going to lose from time to time. And that’s just part of the game. So I just want to be clear that this is not saying we’re going to get anybody to be perfect. It’s about a probabilistic assessment of things.
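To see why a positive expected value still loses often on any single bet, consider a hypothetical series of independent, even-money bets, each won with probability 51 percent (an assumption for illustration, not a figure from the interview). This minimal sketch computes the exact binomial probability of finishing strictly ahead after n bets, working in log space so large n does not overflow.

```python
import math


def prob_ahead(p, n):
    """Probability of more wins than losses after n independent
    even-money bets, each won with probability p (0 < p < 1)."""
    total = 0.0
    for k in range(n // 2 + 1, n + 1):  # need strictly more wins than losses
        log_term = (
            math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log(1 - p)
        )
        total += math.exp(log_term)
    return total


print(round(prob_ahead(0.51, 1), 2), round(prob_ahead(0.51, 1001), 2))
```

A single bet loses 49 percent of the time, yet after 1,001 such bets the chance of being ahead is above 70 percent. Real investments are neither independent nor even-money, so treat this as intuition, not a model.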

Mauboussin's BAIT Framework for Investment Edge

AI Applications in the BAIT Framework

Rob Marsh: Exactly. Well, thank you. It’s funny, as you were talking, I was writing notes on the side of a table I printed out. We were talking about this before we recorded: I asked Google Gemini’s Deep Research to “review all of Michael’s books, writings, recordings, whatever you can find,” gave it context about what we were going to discuss, and had it generate a report. One of the artifacts in that report was a table of your framework, with the edge type, the description, the source of advantage, and some key characteristics of each. And I’ve been scribbling as we talk, noting where AI helps in this area or that element. So…

Michael Mauboussin: Well, if you’re game for it, we could go through some of these and go back and forth, Rob, in case you have something on your list that I’ve not written down. So, just to go back to behavioral, I think there are two or three big themes there.

One is the basic idea of overextrapolation. This is again very well documented in academic research: investors tend to overextrapolate. If things are going well, they think they’ll go well forever. If they’re going poorly, they think they’ll go poorly forever. And they don’t take into consideration (I don’t even want to call it regression toward the mean) that things don’t grow to the sky.

And then related to that would be something like sentiment. So can we measure sentiment? Again, too much optimism tends to be bearish, too much pessimism tends to be bullish. And then the last thing is the wisdom of crowds, and this is the one I’m most interested in, and I think this is where we have some opportunities to use AI.

The wisdom of crowds basically says when you have a collective of people under certain conditions, they’re going to come up essentially with the right price. And the key is the conditions. The three conditions that are typically mentioned are, first, diversity or heterogeneity. We need our agents, our investors, to be different: long-term oriented, short-term oriented, technical, fundamental, whatever it is, throw them in the mix. That’s good. The second is an aggregation mechanism, which of course markets do beautifully: double auction markets. And then the third thing is incentives: if you’re right, you make more money, and if you’re wrong, you lose money and you go away. So it means the smart people are the ones that continue to play the game.
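The diversity condition has a tidy arithmetic counterpart in Scott Page’s diversity prediction theorem, swapped in here as an illustration rather than taken from the interview: the crowd’s squared error equals the average individual squared error minus the spread of the estimates around the crowd average. A minimal sketch with hypothetical estimates:

```python
def crowd_decomposition(estimates, truth):
    """Diversity prediction theorem:
    (crowd - truth)^2 = mean((s - truth)^2) - mean((s - crowd)^2).

    Returns (crowd_error, average_individual_error, diversity).
    """
    n = len(estimates)
    crowd = sum(estimates) / n
    crowd_error = (crowd - truth) ** 2
    avg_individual_error = sum((s - truth) ** 2 for s in estimates) / n
    diversity = sum((s - crowd) ** 2 for s in estimates) / n
    return crowd_error, avg_individual_error, diversity


# Hypothetical fair-value estimates of an asset truly worth 25.
diverse = crowd_decomposition([20, 30, 25, 35, 15], truth=25)  # errors offset
herded = crowd_decomposition([30, 32, 28], truth=25)  # everyone leans high
print(diverse, herded)
```

In the diverse case, individual errors cancel and the crowd is exactly right. In the herded case, every estimate leans high, diversity is small, and the crowd inherits nearly all of the shared bias, which is what happens when investors stop being different from one another.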

So here’s where it becomes really interesting: can we start to use some AI tools to measure some of these things more effectively? As we know, natural language processing is a really powerful tool for things like sentiment and probably to some degree diversity—measuring diversity, although that diversity needs to be manifested in market behavior.

So it’s almost the decision rules of the investors, but those are great examples. Overextrapolation would be another one where we could start to use these tools by applying things like base rates, that is, understanding historical patterns in, for example, sales growth rates or company profits. You could really say to an LLM, “Hey, here’s what’s happened for growth rates. People seem to be thinking this one company’s going to grow to the moon. What do you think is the probabilistic assessment of something like that?” Right? Because it’s going to be able to digest the base rate data very effectively and look at a lot of different situations, that might be a way for us to very quickly shine a spotlight on some of the things that might be of interest. Would you have anything you wrote down that would add to that?
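The base-rate check an LLM might help with reduces to a simple frequency. A minimal sketch, assuming you already have a reference class of historical growth rates (the data below are invented for illustration, not real base rates): the question becomes how often companies in that class actually achieved the growth the price implies.

```python
def base_rate(historical_growth, threshold):
    """Share of historical cases whose growth met or exceeded the threshold.

    historical_growth: e.g., annualized multi-year sales growth rates (decimals).
    """
    if not historical_growth:
        raise ValueError("need at least one historical observation")
    hits = sum(1 for g in historical_growth if g >= threshold)
    return hits / len(historical_growth)


# Hypothetical reference class (not real data): ten companies' 5-year sales CAGRs.
reference_class = [0.02, 0.05, 0.08, 0.03, 0.12, 0.25, 0.07, -0.01, 0.15, 0.04]
implied_growth = 0.20  # growth the current price seems to assume (hypothetical)
print(base_rate(reference_class, implied_growth))  # 0.1
```

Only one of ten hypothetical companies reached 20 percent growth, so a price that requires it deserves scrutiny. The hard part in practice is choosing a sensible reference class, which is where judgment comes in.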

Rob Marsh: Yeah. And just to riff off of that, you snuck in the word “agent” there, which has become very common in discussions of AI applications. You’re using it a little differently, but you’re talking about using AI to measure, and I scribbled down ‘measure versus proxy,’ because one of the things that can be done is to create agents that each have a certain framework and direction, so they can proxy that independence, go surface the information, and present it to you. That way you can simulate, if you will, the public markets and a public discussion, with some confidence that you have independence of thought.

The Noise Problem and AI Solutions

Michael Mauboussin: So, Rob, let me just tell you one quick story on this which I think is fascinating. There’s a very interesting book, I think it’s an incomplete book, but a very interesting book about the topic of noise, by Danny Kahneman, Cass Sunstein, and Olivier Sibony. They’re great guys, all of them. And the idea of noise is that when you give people judgments to make, whether that’s many people making a judgment on the same fact pattern or you making the same decision over time, there’s a lot of noise, a lot of randomness.

For instance, in the book, they describe insurance adjusters. You have to settle this particular insurance case. They give a folder to 50 different insurance adjusters at the same firm, right? So, they’re trained the same way, they’re supposed to be doing the same thing, and they come up with these wildly different answers.

So, here’s an example. I did—it’s actually in the book, although it’s all anonymous, mercifully anonymous—but I did this at an investment firm where I worked. I developed a single page, and folks were up for this. It was actually a real company, but I hid—I scaled all the numbers so you couldn’t identify it just by looking at the numbers. So I knew the stock price. And I literally asked people, “Write down the price at which you would be indifferent between buying and selling.” So that’s kind of fair value, whatever it is. Now, the stock price was $25, and the lowest number—we had about 30, 35 people participate—the lowest number was $5 and the highest number was $130, and the average of everybody was $25.

Right? So, the market itself worked. But here’s the thing. Now, you work in an investment organization and you hand that folder to analyst A, and she comes up with $5. You hand it to analyst C and he comes up with $130. Like, what are you going to do with that? So, very much to your point, they say, “Well, how do you mitigate noise?” Well, one is if there’s an algorithm—we can talk about that, too. If there’s an algorithm, make sure that you write it down and hew to it. That’s great. But the second thing they said is you need diversity. Do exactly that. Have 30 analysts cover the same stock. Why do we not do that? Wildly expensive. Wildly inefficient.

But you just described a process that absolutely allows you to achieve that objective in a really fascinating fashion, very quickly, and I think would replicate the vast majority of what we’d be after.

Right? So let’s look at XYZ. Your profile is you be Seth Klarman, you be Warren Buffett, you be Peter Lynch, whoever it is. Let them go at it with the tools that the LLM thinks those people would use. And you create that wisdom of crowds within. By the way, if you have a view on the stock, which you very well may, it will automatically give you counter-views to your own take based on the research and thought process of someone you likely respect. So that, I think, is really cool, and we’re working on some of that stuff now in our own organization. It’s early days, but it’s super fun and fascinating. You have to be thoughtful about prompting, to be clear on that, but it’s a really exciting area to explore.
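The analyst experiment, and the persona approach built on it, can be summarized with a few statistics. A minimal sketch, loosely in the spirit of the noise audits described in the Kahneman, Sibony, and Sunstein book (the exact metrics chosen here are assumptions): feed in fair-value estimates for the same case, whether from human analysts or from LLM personas, and report the crowd average alongside measures of disagreement. The estimates in the usage line are hypothetical.

```python
from itertools import combinations
from statistics import mean, median, pstdev


def noise_audit(estimates):
    """Summarize disagreement among judgments of the same case.

    Expects at least two positive estimates. The pairwise gap is the absolute
    difference between two judgments as a share of the pair's average.
    """
    if len(estimates) < 2:
        raise ValueError("need at least two estimates")
    pair_gaps = [abs(a - b) / ((a + b) / 2) for a, b in combinations(estimates, 2)]
    return {
        "crowd_average": mean(estimates),
        "std_dev": pstdev(estimates),
        "low": min(estimates),
        "high": max(estimates),
        "median_pairwise_gap": median(pair_gaps),
    }


# Hypothetical fair-value estimates for one stock from seven analysts or personas.
print(noise_audit([5, 18, 22, 25, 28, 30, 47]))
```

A large median pairwise gap says the organization’s answer depends heavily on who gets the folder; the crowd average is the diversified view the passage argues for.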

Pod Shops and Center Books

Rob Marsh: So, Michael, at the risk of going off on a tangent, as you were describing the 30 analysts and how expensive that would be: the ones doing that right now are the pod shops. And it helps explain why the center books have been so successful (for the uninitiated, the ‘center book’ is the aggregated portfolio built from all the different independent inputs). But that’s just an aside.

Michael Mauboussin: But no, Rob, maybe we should stay there for just a second because A, I agree with you. B, by the way, the pod shops charge very high fees, but they deliver above and beyond those fees. So they’re extraordinarily expensive from the point of view of fees, but they’re actually delivering their fees and then some. But the other thing that I think is really important, and this ties into our AI conversation, is the one thing you want to think about is where in my process is judgment important and where can I be systematic? I think what these center books do is allow them to extract alpha or excess returns from all these different pods, but they’re doing it in a systematic fashion. And so that is a really key thing.

So, interestingly, why can’t the individual pods do that? Well, they’re doing a lot of good stuff, but they’re not taking the full systematic approach that the center book is allowed to take. So it’s really interesting. That’s the other thing I would just say when we think about our investment process—from identifying mispriced securities to how we analyze, to how we build portfolios, to how we hold them to monetize that edge—where along that chain can we be systematic, even if we’re discretionary investors? Where can we be systematic versus where are we going to be discretionary? It’s a super fascinating question. And that sort of goes back to the systematic community, the quants versus the discretionary guys. They still are kind of two different tribes, right? They don’t really talk to each other, but the quants know there’s much they can learn from the discretionary people, and the discretionary people know there’s a lot they can learn from the quants. And maybe AI is that sort of glue that brings these two communities together or takes the greatest hits of both these communities. And I think that’s literally what the center books are doing. That’s essentially a manifestation of that. But can we roll that out to other organizations in a fuller way?

Decision Documentation and Process Auditing

Rob Marsh: Yes, absolutely. I think we can connect the dots between the proxy idea (using AI to proxy many different views, or even to understand actual analysts’, PMs’, and traders’ views) and something you noted earlier: the ideal of being able to write down, track, and audit the decision-making process. Where can AI be used for that? Where can its core competencies be applied?

My mind goes in two directions. One is simply that analysts are building models and recording phone calls and meeting notes and everything else, and it all goes into your reservoir, which then can get processed. You get the pattern matching, the semantic understanding, the fuzzy logic capabilities, all of that processing it. So that’s one vector. The other comes from a conversation I had a couple of weeks ago with a friend and former colleague from the Tudor days who, by the way, is a big fan of yours and is extremely process-oriented: using AI to mimic him, not so he or his team doesn’t have to do the work, but almost as an audit or a control mechanism. Is he following his own well-defined, predefined process?

Michael Mauboussin: Yeah. I love all that. By the way, there’s a very famous paper from Lewis Goldberg in psychology, and the basic punchline is the model of man is better than man himself, or the model of a person is better than the person himself. So we’ll gender-neutralize that. But the idea is, I come to you, Rob, and I say, “How do you want to, what is your process?” And you tell me, and I write it down. And then I observe, “Does Rob follow what he says he wants to do?” And the answer is there’s always slippage between those things. And we know that, by the way, we even know that in the investment management business. There are examples of firms that help money managers with position sizing in their portfolios, and they start off by asking the managers, “How do you want to do this? What are your constraints?” and so on. They write it all down, and then the investor inputs all this data into the software, which allows the company to track the difference between what they’re actually doing and what they said they wanted to do. They can see that slippage, and it comes out to somewhere around 100 to 150 basis points of performance per year, which is not insignificant. So that’s the first thing I would just say, that I think you’re exactly right, and just the discipline of hewing to what we said we’re going to do.
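The slippage measurement can be sketched as the gap between a “model” portfolio, built by mechanically applying the process the manager wrote down, and the portfolio actually held. A minimal sketch, assuming annual returns for both are available; the function name and numbers are hypothetical, and the simple average ignores compounding.

```python
def slippage_bps(model_returns, actual_returns):
    """Average annual gap, in basis points, between what the written-down
    process (the 'model' portfolio) earned and what was actually earned."""
    if len(model_returns) != len(actual_returns) or not model_returns:
        raise ValueError("need matching, non-empty return series")
    gaps = [m - a for m, a in zip(model_returns, actual_returns)]
    return 10_000 * sum(gaps) / len(gaps)


# Hypothetical annual returns (decimals) over three years.
model = [0.110, -0.040, 0.180]
actual = [0.098, -0.055, 0.170]
print(round(slippage_bps(model, actual)))  # 123
```

Here the hypothetical manager trails their own stated process by about 123 basis points a year, within the range the passage describes.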

The Learning Challenge with AI

The second reaction I have, Rob, relates to what you were saying about gathering all this information, and this is maybe my overarching struggle with AI and its application. My biggest core struggle is this chicken-and-egg problem. You’ve been doing this for a long time. You’re a very thoughtful guy. You kind of know what works and what doesn’t work. So when you put something into an AI program and you get an output, you have a very good filter to judge whether it’s of good or poor quality. You have a nose and a sense of this because you’ve been through the process yourself. Now, you were slower perhaps, and you didn’t have the reach that the machine has, but you’d been through the process.

You know, I built financial models when I was an analyst, so I know how to build one, and I know these things have certain nuances. Even then, I never let my junior analysts touch my models, because I felt it was fundamental that I understand what was going on. I would go through a line item, something wouldn't make sense, and I had to see it for myself and be in the middle of it. So there's a kind of prior knowledge that's necessary to use these tools effectively.

That's why I say I might just be the grouchy old guy, or it might be generational: I think that if you've already learned to write and to model, then using these tools is fabulous, because you can judge the output very quickly. You can take all the good stuff and set aside, or figure out, the bad stuff.

I had breakfast with a friend recently, and he mentioned that one of his workout partners is a physics professor at a university in California. She said her physics students have never had higher grades on the problem sets and never had worse grades on the finals. The reason is that they can apply AI to the problem sets, where each problem has an objective answer. But for the finals, they're sitting in a room with blue books, and they haven't actually learned the material. So the key is to learn.

Getting the right answer is obviously important in a lot of things, but when a lot of judgment is involved, it's not only about getting the right answer; the process of getting there is really important. So the second thing I'd say is that this is what I struggle with: the need to know what you're doing in order to use these tools effectively. I worry that some people are going to jump the gun and use them without knowing what they're doing.

Decision Documentation and Feedback

The third thing I want to say, and you also touched on this, which was fabulous, is documenting your decisions. I think this is where AI can be incredibly powerful. Leave aside information gathering, pulling things from different areas as you said, which is all powerful; I mean documenting decisions. The reality is that most people don't do it. It requires some discipline, and many people just don't. But suppose you start doing this every time you make an investment decision (and by the way, a non-decision is a decision): you just document it. You can now speak into your device, so it's not like you have to spend tons of time on this. You speak for a minute to summarize where you are and set it aside. Then, periodically, you go back and let the AI comb through those decisions and say, "Let me tell you: it seems like when this happens, you do that. When that happens, you do this. When you do this, it tends to work out well. When you say these things, it tends not to work out well." That's an example of how AI could reflect honest feedback on your decision process back to you in a way that may help you.

I think one of the reasons people don't document decisions is that it can be embarrassing. You're going to be wrong a lot, and you don't want everybody in the world to know about it. But if you're doing it for yourself, simply to improve, this could be an invaluable tool.
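The journaling loop described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: each dictated note has already been transcribed and given a hypothetical `tag` describing the situation (for example, "bought on bad news"), and each outcome is later recorded as a simple worked/didn't-work flag. An AI model could do the tagging; the tally below only shows the kind of pattern it would be surfacing.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """One journal entry. All field names are illustrative assumptions."""
    note: str                      # the one-minute dictated summary
    tag: str                       # situation label, e.g. assigned by an AI model
    action: str                    # "buy", "sell", "hold" (a non-decision is a decision)
    worked: Optional[bool] = None  # filled in later, once the outcome is known


def hit_rates_by_tag(journal: list[Decision], min_count: int = 2) -> dict[str, float]:
    """Share of resolved decisions that worked out, grouped by situation tag.

    Tags with fewer than `min_count` resolved entries are skipped, since a
    single outcome says little about a habit.
    """
    wins: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for d in journal:
        if d.worked is None:  # still open; cannot score it yet
            continue
        totals[d.tag] += 1
        wins[d.tag] += int(d.worked)
    return {tag: wins[tag] / n for tag, n in totals.items() if n >= min_count}


journal = [
    Decision("Added after guidance cut", "bought_on_bad_news", "buy", True),
    Decision("Added after second guidance cut", "bought_on_bad_news", "buy", True),
    Decision("Chased breakout", "bought_on_momentum", "buy", False),
    Decision("Chased another breakout", "bought_on_momentum", "buy", False),
    Decision("Held through earnings", "held_through_event", "hold"),
]
print(hit_rates_by_tag(journal))
```

Real journals would need many more entries per tag before a hit rate means much; the `min_count` threshold is a crude guard against reading habits into one or two outcomes.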

Rob Marsh: Yeah. It's funny, I started shaking my head a little when you said people don't want to be wrong. In many respects, the industry self-selects out of that. You're not going to be around very long if you're that fragile.

But I want to go back to your concerns about learning: how, as an analyst, you wouldn't let your juniors touch your models, and how you develop a visceral knowledge over the years by going through the tedium. You actually go find the data, understand why the data is not what you think it is, or discover that your vendor has changed the format. So much knowledge builds up over time that you can't even articulate how it manifests, but you see something on a big spreadsheet and you know something's wrong. How do you get the experience that comes from that tedium, separate from the experience of being wrong?

Michael Mauboussin: What's the answer, Rob? What is the answer to that? Seriously, that's my biggest struggle. For example, I was visiting a very successful multi-strat firm, and they said, "We try to have our analysts cover around 40 stocks, not more than that, and we want them to bump that up to 60 stocks with AI." I'm not sure I see exactly how they get there, but I see the logic, because these analysts are doing the work now. They are in the trenches, doing the tedious stuff, so I can see how AI could help them on the margin to extend their capabilities. But if someone didn't know what they were doing, I'd be scared to death to let them use these tools. Now again, take gathering information, which is how I use it for my own research.

It's extraordinary. You can say to it, "Give me the primary research, give me the link to the primary research," and either it's there or it isn't. That goes a long way toward solving the problem of made-up papers. You can just say, "Give me the link to the actual paper," and it'll do it for you.

Rob Marsh: Right.

Michael Mauboussin: And for that kind of stuff, it's extraordinary. I'm pretty good at Googling things, but I've learned a lot: it has surfaced a lot of material, including documents I would have struggled to find on my own. And I'd say I'm probably above average at this, because it's a lot of what I do all day. So it's been really good for that kind of work. But I think this chicken-and-egg problem is something we need to think a lot about, especially for young people.

By the way, you mentioned the tedium of doing all these things. The other thing I have to say is that in the olden days, we worked in offices together. The claim is that something like 70 percent of knowledge is tacit knowledge, which is what you pick up on the job. You and I have a cup of coffee, or we're talking about the Yankees game or whatever, and information is being conveyed that could be very helpful but is not overt. I think companies are losing some of that as well, and its importance was probably underestimated.

Business Edge and Training Considerations

Rob Marsh: I want to bring it back to the topic of edge, because I think it's critical, and look at some of its other dimensions. When we talk about behavioral, analytical, informational, and technical edge, that's largely within the context of investing and trading decisions: buy, sell, hold, sizing, and the like.

If you're running an investment business, there's also a business edge, a longer-term edge, which is what you're talking about: the training, the people. If I were sitting in that seat again and responsible for a team of analysts, I would probably flip it a little, so that they're responsible for quality-control checks on the output, not only saying "This is good or bad" and passing it along, but explaining why it's good or bad. The tedium time becomes less about tracking down the numbers per se and more about writing a lot, because, again, writing is thinking. You don't want to obviate the need for analysts to write. Maybe you structure it that way. Instead of getting reps on 30 companies, perhaps you get 60 and have more meaningful conversations. Or maybe you still cover the same 30 but do it in a much more nuanced way, coming at it from different angles.

So the analyst doesn't have to spend all their time just populating the model and cross-checking the numbers. They could actually have time for some of the inefficient thinking, with an AI co-pilot but also with their human boss, whoever they're reporting to. I think that might be a way to bridge some of your concerns about the learning process while still getting the leverage and efficiency benefits.

Michael Mauboussin: Yeah, I think that's really smart, Rob. If I were in charge of a group of people doing an analytical process, I'd point to certain tools that are very helpful for making good decisions. By the way, I should qualify this: I want to be clear that there are a lot of different investment approaches under the tent here.

Rob Marsh: Right.

Michael Mauboussin: I teach at Columbia Business School, I teach fundamental analysis, and we believe that the present value of future cash flows dictates stock prices. That’s not the only way to think about the world. There are a lot of other ways.

So I want to be clear about that. But let me mention two tools that I think are really helpful for making good decisions, and I think AI could be extraordinarily helpful with both. One is base rates. Danny Kahneman and Amos Tversky wrote about this extensively, Phil Tetlock talks about it a lot in his work on superforecasters, and it's very much part of what we try to do. With a base rate, instead of analyzing XYZ company based on my own information gathering, talking to management, and so on, I simply ask about this company as an instance of a larger reference class.

Rob Marsh: Go on.

Michael Mauboussin: I'm going to ask what happened to other companies like this in the past, and where my judgment falls within that distribution of outcomes. It's a bit of a reality check, often a sobriety check, candidly. Understanding base rates, which AI may let us do much more effectively than we've ever been able to before, could be really helpful.
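Here is a minimal sketch of that reality check in Python. It assumes you already have a reference class, a list of outcomes for comparable companies (below, made-up five-year revenue growth rates), and asks where your own forecast falls in that distribution. The data and the function name `percentile_in_reference_class` are illustrative assumptions.

```python
from bisect import bisect_left, bisect_right


def percentile_in_reference_class(forecast: float, outcomes: list[float]) -> float:
    """Percent of reference-class outcomes that fall below `forecast`.

    Ties count as half, so a forecast equal to every outcome lands at 50.
    """
    if not outcomes:
        raise ValueError("reference class is empty")
    ranked = sorted(outcomes)
    below = bisect_left(ranked, forecast)            # outcomes strictly below
    ties = bisect_right(ranked, forecast) - below    # outcomes equal to forecast
    return 100.0 * (below + 0.5 * ties) / len(ranked)


# Hypothetical reference class: annualized 5-year sales growth for 10 peers.
peers = [0.02, 0.03, 0.04, 0.05, 0.05, 0.06, 0.08, 0.10, 0.12, 0.20]
my_forecast = 0.15  # your bottom-up estimate for XYZ company
pct = percentile_in_reference_class(my_forecast, peers)
print(f"Forecast sits at the {pct:.0f}th percentile of the reference class")
```

A forecast in the top decile of its reference class isn't necessarily wrong, but it is the kind of result that should send you back to check your assumptions.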

The second is the premortem. The idea was developed by Gary Klein, and it's very popular: Danny Kahneman loved it, and Dick Thaler loves it. Before making an investment, you get a group of people together (and by the way, with AI we may not even need the group part). You pretend the investment was made and turned out to be a fiasco. Each person writes down why it was a fiasco. Then you share those and ask, "Are any of these things likely to happen? If so, maybe we should think twice about this investment."

You could have the AI do the premortem, rather than having people do it in a room, perhaps, as you said, with these different personas.
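One way to run an AI-assisted premortem is sketched below in Python. It is a minimal sketch under stated assumptions: the personas are hypothetical, and the model call itself is left out because the interview doesn't name a tool. `premortem_prompt` builds the "it turned out to be a fiasco, explain why" prompt for each persona, and `recurring_reasons` counts which failure reasons show up across independent write-ups, which are the ones most worth a second look.

```python
from collections import Counter

PERSONAS = [  # hypothetical reviewers; choose your own
    "a skeptical short seller",
    "an industry operator",
    "a credit analyst",
]


def premortem_prompt(thesis: str, persona: str, years: int = 2) -> str:
    """Frame the investment as having already failed, then ask why."""
    return (
        f"You are {persona}. It is {years} years from now. We made this "
        f"investment and it turned out to be a fiasco.\n"
        f"Thesis: {thesis}\n"
        "List the three most likely reasons it failed, one per line."
    )


def recurring_reasons(writeups: list[list[str]], min_mentions: int = 2) -> list[tuple[str, int]]:
    """Reasons raised independently by at least `min_mentions` participants.

    Each write-up is de-duplicated first so one participant repeating a point
    doesn't count twice. Matching is on normalized text only, so paraphrases
    of the same reason are counted separately.
    """
    counts = Counter()
    for reasons in writeups:
        counts.update({r.strip().lower() for r in reasons})
    return [(r, n) for r, n in counts.most_common() if n >= min_mentions]


thesis = "Margins expand as the new plant ramps."
prompts = [premortem_prompt(thesis, p) for p in PERSONAS]
# Responses would come from a model (or from people by email); these are made up.
writeups = [
    ["Plant ramp delayed", "Key customer lost"],
    ["plant ramp delayed", "Input costs spiked"],
    ["Key customer lost", "Plant ramp delayed"],
]
print(recurring_reasons(writeups))
```

Collecting each write-up separately before comparing them mirrors the premortem's core design: people (or personas) list reasons independently, so no single voice steers the group.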

Rob Marsh: Michael, if I could jump in real quick, I think there may be an advantage here: it's less threatening on a personal level. If I'm a junior sitting in a premortem with Michael Mauboussin, I might be more reluctant to say anything. So I think you could get more honest feedback.

Michael Mauboussin: That's a great point. By the way, Roger Martin, who has written a lot about strategy, talks about this issue. He says that at the end of the day, when you're making a decision, the decision is going to go forward, and people don't like pessimists. But in a premortem, you get points for being clever about how things can go wrong. So it's almost like you earn clever points instead of pessimist points. He says that reframing is really helpful.

The second benefit is that it allows you to create contingency plans. You say, "If this bad thing happens, then we will do X." So you're not scrambling when the bad thing happens; you've already thought through what you're going to do. So those are two examples.

Rob Marsh: Do you have an example of a premortem in action and how valuable it turned out to be?

A Premortem Success Story

Michael Mauboussin: At one investment firm, I was always involved with the investment committee process. I sat in on all the meetings, and from time to time I was on the committee itself. This was a particular investment where I was one of the five committee members. Our team came in and pitched an idea, and it was literally three hours of going back and forth through their work. And these guys did a fantastic job: they were well prepared, they knew their stuff, and they had thought through a lot of different angles.

But what happened is that the head of that investment committee had a particular point of view and was guiding the conversation in a particular direction. It kept coming back to a grooved path the CIO had set early on, and we couldn't get out of it.

At the end of it, after these poor people had done hours and hours of work, I said that we as an investment committee had to do a premortem. We did one by email, and it was remarkable: two or three things surfaced among the committee that I would say were the most important things to think about for that investment, and we had not covered them in three hours of discussion. It wasn't as if we had been bombing through the thing superficially, where you'd expect to miss relevant points. This was hours and hours of detailed discussion, and it still missed what turned out to be two or three really important issues that were under the surface and required this mechanism to bring them up. That was a real eye-opener for me, and it's probably the most vivid example in my mind.

Rob Marsh: Wow. Yeah. I’ve more than once wished I had gone through that process before making certain decisions.

Systematic vs. Judgment-Based Decisions

Rob Marsh: But I want to go back to something you mentioned earlier about where you can be systematic versus where you need judgment. And I think this is where the rubber meets the road in terms of how AI can be most effectively deployed. You talked about the multi-strat firms and how they’re able to extract alpha through their center books by being systematic about portfolio construction, even though the individual pods are making discretionary stock-picking decisions.

Michael Mauboussin: Yeah, exactly. And I think this gets to the heart of what we should all be thinking about as we contemplate how to use AI effectively. The overarching thing would be that we should be deep in this process of contemplating where we can hand things over and where we can add value, try to demarcate those two areas, and proceed as appropriate.

One example, and you and I have talked about this over the years: an area I find completely fascinating is sizing. I mentioned blackjack before. When Ed Thorp actually went to Reno to play blackjack, he used a two-part system. Part one was edge, through card counting; part two was bet sizing based on his bankroll and his risk appetite. Both parts were systematic. So the question is, can we be more systematic about sizing than we are today?

And I think the answer is absolutely yes. Most people, even very sophisticated investors, are not very systematic about how they size their positions. They might have some rules of thumb, but they're not thinking about it in terms of expected value, volatility, correlation with the rest of their portfolio, and so on. That's an area where I think AI could be extraordinarily helpful in making people more systematic.
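The interview doesn't spell out the sizing rule, but the rule Thorp is best known for applying is the Kelly criterion. For a simplified win/lose bet that pays b-to-1 and wins with probability p, full Kelly stakes the fraction f* = p - (1 - p)/b of the bankroll, and nothing when that is negative. Here is a minimal Python sketch; the `kelly_fraction` name and the fractional-Kelly `scale` parameter (a common way to express a lower risk appetite) are illustrative assumptions, not a description of Thorp's exact system, and real blackjack hands are more complex than a single binary bet.

```python
def kelly_fraction(p_win: float, payout: float, scale: float = 1.0) -> float:
    """Fraction of bankroll to stake on a bet paying `payout`-to-1.

    Full Kelly is f* = p - (1 - p) / b. `scale` < 1 gives fractional Kelly,
    which gives up some long-run growth for much lower volatility.
    A bet with no edge (f* <= 0) gets zero.
    """
    if not 0.0 <= p_win <= 1.0 or payout <= 0:
        raise ValueError("p_win must be in [0, 1] and payout must be positive")
    full = p_win - (1.0 - p_win) / payout
    return max(0.0, full) * scale


bankroll = 10_000
# An even-money bet (b = 1) that a card counter estimates wins 51% of the time.
stake = bankroll * kelly_fraction(0.51, 1.0)
half_kelly_stake = bankroll * kelly_fraction(0.51, 1.0, scale=0.5)
print(round(stake), round(half_kelly_stake))
```

The two parts of the system show up directly: the edge enters through `p_win`, and the bankroll and risk appetite enter through the multiplication by `bankroll` and the `scale` factor.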

Rob Marsh: I'm writing down some of these notes, and it strikes me that we could have a whole other hour-long conversation just on identifying what's truly systematic versus what's judgment.

Michael Mauboussin: I agree with that. I think as humans, we tend to overreach a little. For example, many investors love to think they're good at timing, whether market timing, sector timing, or whatever it is. And the reality is that most people are not very good at timing. So that might be an area where you say, "You know what, I'm just going to be systematic about this. I'm going to dollar-cost average or rebalance on a regular basis," rather than trying to time things.
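Calendar rebalancing is one of the simplest ways to take timing out of human hands. The Python sketch below is a minimal illustration, assuming a portfolio described by current market values and target weights (both hypothetical). On each scheduled date it computes the trades that bring every holding back to target, with a threshold so tiny drifts are ignored.

```python
def rebalance_trades(values: dict[str, float], targets: dict[str, float],
                     min_trade: float = 0.0) -> dict[str, float]:
    """Dollar trades (+buy / -sell) that restore target weights.

    `targets` must cover the same assets as `values` and sum to 1.
    Trades smaller than `min_trade` in absolute value are skipped.
    """
    if set(values) != set(targets):
        raise ValueError("values and targets must list the same assets")
    if abs(sum(targets.values()) - 1.0) > 1e-9:
        raise ValueError("target weights must sum to 1")
    total = sum(values.values())
    trades = {}
    for asset, value in values.items():
        trade = targets[asset] * total - value  # gap between target and current value
        if trade != 0 and abs(trade) >= min_trade:
            trades[asset] = trade
    return trades


# Stocks rallied, so a 60/40 mix drifted to 70/30 (illustrative numbers).
holdings = {"stocks": 70_000.0, "bonds": 30_000.0}
print(rebalance_trades(holdings, {"stocks": 0.6, "bonds": 0.4}, min_trade=500))
```

Dollar-cost averaging works in the same spirit: a fixed amount invested on a fixed schedule, regardless of what you think the market will do next.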

Pattern Recognition and AI

Rob Marsh: Well, pattern recognition may be our third hour. That's an area where, again, you have a deep fundamental perspective, while I come from the macro world, where my big opportunity was building pattern-based trading systems, and I was able to have a career based on variations of that. But…

Michael Mauboussin: But that's the thing I want to underscore for people. I am not anti-pattern recognition. I am against thinking there's a pattern where there is none. What you did, Rob, was find legitimate patterns that allowed you to make money. That's fantastic. The problem is that humans are pattern-seeking machines, and we see patterns everywhere, including where they don't exist.

Rob Marsh: I'd suggest my former boss's success is more of a testament than mine. But you raise a very good point. It's not about choosing sides; these are not political or religious debates. And one of the profound gifts of your work over the years has been providing the structure and frameworks to identify when things are appropriate, where they should work, where they shouldn't, and why.
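A quick simulation shows how easily patterns appear where none exist. The Python sketch below is an illustration of our own, not something from the interview: it generates many "strategies" whose monthly results are pure coin flips, then reports how good the best one looks. With enough candidates, the top performer typically appears skilled, even though every strategy has zero edge by construction.

```python
import random


def best_of_random_strategies(n_strategies: int, n_months: int, seed: int = 0) -> float:
    """Hit rate of the luckiest strategy among many zero-edge coin flippers."""
    rng = random.Random(seed)  # fixed seed so the experiment is repeatable
    best = 0.0
    for _ in range(n_strategies):
        # Each month is a fair coin: True counts as a winning month.
        wins = sum(rng.random() < 0.5 for _ in range(n_months))
        best = max(best, wins / n_months)
    return best


# 1,000 strategies, 36 months each; every one is a fair coin.
print(f"Best hit rate: {best_of_random_strategies(1_000, 36):.0%}")
```

This is one reason out-of-sample testing matters: a pattern found by searching many candidates has to beat what the best of many random candidates would have done, not just a 50% hit rate.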

Michael Mauboussin: Yeah.

Rob Marsh: It's the first principles around all of that which are so important. So I think there's a lot of work to do. But the one area where I feel most limited is sizing, and I actually think that's an area with a lot of opportunity. You mentioned the center books earlier, and the alpha capture at these multi-strategy firms, where they figure out how to take the best ideas and put them together, and they obviously use leverage in that mix as well.

Michael Mauboussin: I just think there's an enormous opportunity for a lot of investors to be smarter about how they monetize the edge they find, and I see sizing as probably the area where most people have the most room to improve. You could imagine building a systematic approach to sizing that takes into account your conviction level, the expected volatility of the position, how it correlates with the rest of your portfolio, your overall risk budget, and so on. Then you could compare what you're actually doing with what the ideal would be, monitor the performance of both portfolios over time, and, to the degree the systematic portfolio does better, migrate toward it.
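To make that concrete, here is a minimal Python sketch of one possible rule, with every ingredient an assumption: positions are sized in proportion to conviction divided by expected volatility, scaled down for positive average correlation with the rest of the book, then normalized so the weights sum to a gross-exposure budget (a simplified stand-in for a full risk budget). The rule, the position names, and the function names are hypothetical; the point is the side-by-side comparison with actual weights, the "shadow portfolio" described above.

```python
def systematic_weights(conviction: dict[str, float], vol: dict[str, float],
                       avg_corr: dict[str, float], gross_budget: float = 1.0) -> dict[str, float]:
    """Hypothetical sizing rule: conviction / volatility, penalized for correlation.

    conviction: your score per position (e.g. 1 to 5)
    vol:        expected annualized volatility (0.30 == 30%)
    avg_corr:   average correlation with the rest of the portfolio, in [-1, 1]
    Weights are rescaled so they sum to `gross_budget`.
    """
    raw = {}
    for name, c in conviction.items():
        penalty = 1.0 + max(0.0, avg_corr[name])  # crowded bets get smaller
        raw[name] = c / (vol[name] * penalty)
    total = sum(raw.values())
    return {name: gross_budget * r / total for name, r in raw.items()}


def sizing_gaps(actual: dict[str, float], ideal: dict[str, float]) -> dict[str, float]:
    """Actual minus systematic weight per position (positive = oversized)."""
    return {name: actual.get(name, 0.0) - w for name, w in ideal.items()}


ideal = systematic_weights(
    conviction={"pos_a": 4, "pos_b": 2, "pos_c": 3},
    vol={"pos_a": 0.25, "pos_b": 0.40, "pos_c": 0.30},
    avg_corr={"pos_a": 0.2, "pos_b": 0.6, "pos_c": 0.0},
)
actual = {"pos_a": 0.30, "pos_b": 0.40, "pos_c": 0.30}
for name, gap in sizing_gaps(actual, ideal).items():
    print(f"{name}: ideal {ideal[name]:.1%}, gap {gap:+.1%}")
```

The gaps alone say nothing about which portfolio is better; tracking the returns of both over time is what would tell you whether to migrate toward the rule.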

The Chess Program Analogy

I'm reminded of a great story about generations of chess players. Magnus Carlsen is obviously one of the greatest of all time, and by running chess programs alongside the players' games, researchers were able to compare players who had trained with chess programs against those who had not. What they found is that the players who trained with chess programs made moves much more similar to what the programs would recommend.

So what would that be for us? How do we develop our version of the chess program, one that shows us the right moves given the situation so we can learn from it over time and get better and better? To me, the magic wand would be a flight simulator, a chess program that would let us learn as we did our jobs.
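The chess comparison rests on a simple statistic: how often a player's move matches the engine's recommendation. A minimal Python sketch of the investing analogue, assuming you log both your actual decision and a model's recommended decision for each situation (the decision labels below are made up), might look like this:

```python
def agreement_rate(mine: list[str], model: list[str]) -> float:
    """Share of decisions where you did what the model recommended."""
    if len(mine) != len(model) or not mine:
        raise ValueError("need two non-empty decision logs of equal length")
    matches = sum(a == b for a, b in zip(mine, model))
    return matches / len(mine)


def disagreements(mine: list[str], model: list[str]) -> list[int]:
    """Indices where you departed from the model: the cases worth reviewing."""
    return [i for i, (a, b) in enumerate(zip(mine, model)) if a != b]


my_moves = ["buy", "hold", "sell", "hold", "buy"]
model_moves = ["buy", "sell", "sell", "hold", "hold"]
print(agreement_rate(my_moves, model_moves))
print(disagreements(my_moves, model_moves))
```

Tracking that rate over time, alongside the outcomes of the disagreements, would show both whether you're learning the model's "moves" and whether your departures from it add value.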

Conclusion

Rob Marsh: Well, we’re running up against our time limit, but this has been absolutely fascinating. I think we’ve covered a lot of ground on how AI can enhance the investment process while being mindful of the challenges and limitations. The key seems to be finding that balance between leveraging AI’s capabilities and maintaining the human judgment and experience that’s so critical to successful investing.

Michael, thank you so much for taking the time to share your insights. This is going to be super exciting, and we'll see how it all unfolds. I really appreciate your thoughtful answers and comments.

Michael Mauboussin: Rob, this was fantastic. Thank you so much for having me on. These are such important topics, and I think we’re just at the beginning of figuring out how to use these tools effectively. It’s been a real pleasure.

Common Behavioral Biases in Investing and Mauboussin's AI-Aided Countermeasures

For more information on Michael and his writings, you are encouraged to visit his website: https://www.michaelmauboussin.com/

Disclaimer: The information contained in this newsletter is intended for educational purposes only and should not be construed as financial advice. Please consult with a qualified financial advisor before making any investment decisions. Additionally, please note that we at AInvestor may or may not have a position in any of the companies mentioned herein. This is not a recommendation to buy or sell any security. The information contained herein is presented in good faith on a best efforts basis.
